3.1. Comparison of CPU/GPU time required to achieve SS by Python and... | Download Scientific Diagram

Amazon.co.jp: Hands-On GPU Computing with Python: Explore the capabilities of GPUs for solving high performance computational problems : Bandyopadhyay, Avimanyu: Foreign Language Books

Amazon | Practical GPU Graphics with wgpu-py and Python: Creating Advanced Graphics on Native Devices and the Web Using wgpu-py: the Next-Generation GPU API for Python | Xu, Jack | Graphics &

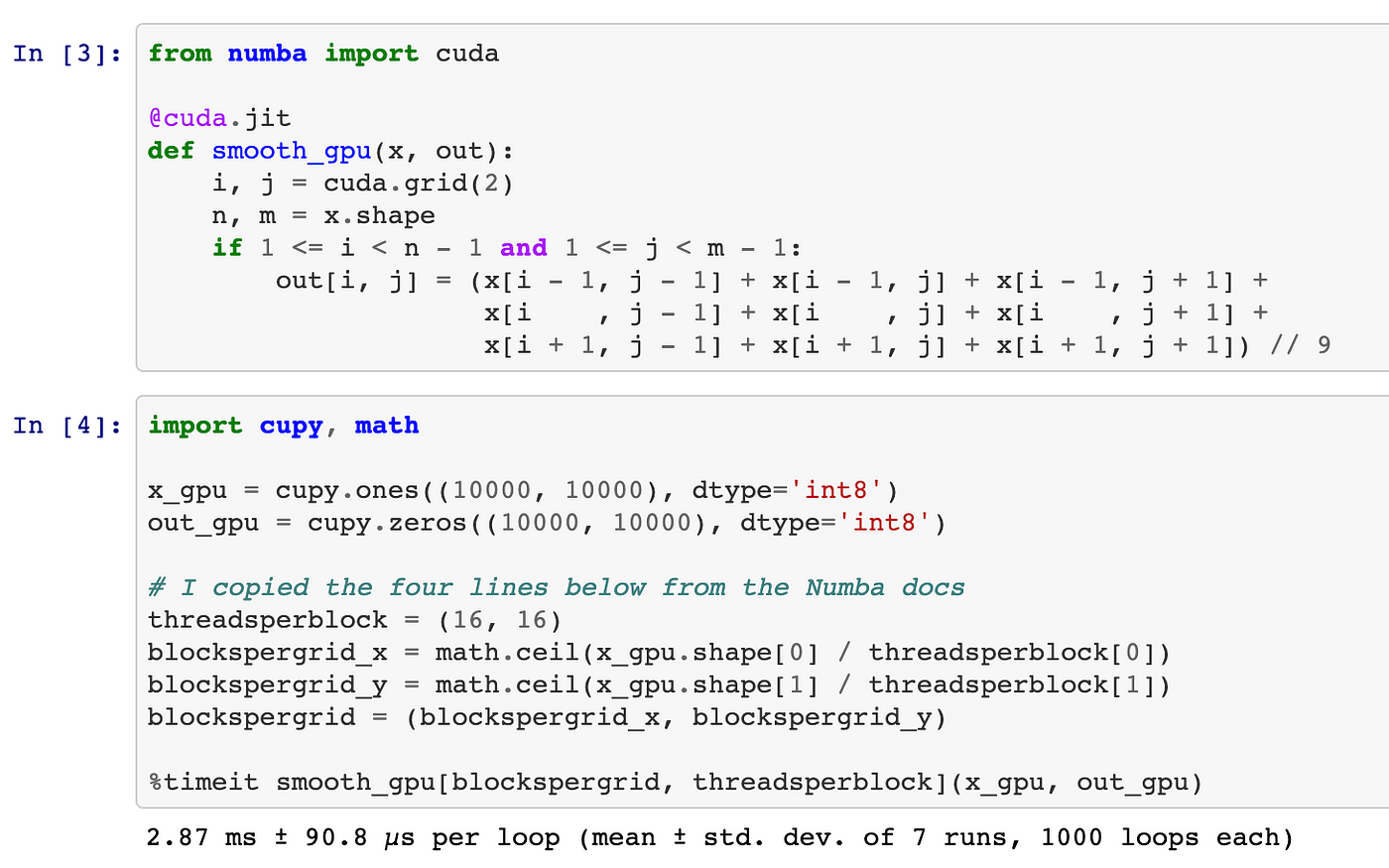

Executing a Python Script on GPU Using CUDA and Numba in Windows 10 | by Nickson Joram | Geek Culture | Medium

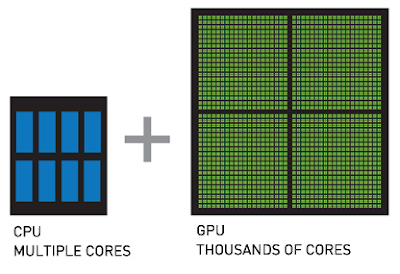

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

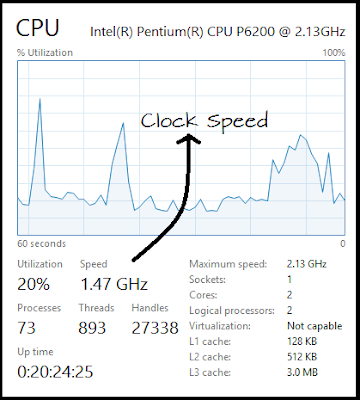

How to Set Up Nvidia GPU-Enabled Deep Learning Development Environment with Python, Keras and TensorFlow

Warp: Accelerate Python Frameworks for Simulation and Graphics utilizing Multi-GPU Technology | NVIDIA On-Demand